Story Highlights

- AI can do real, measurable harm if applied unethically

- Leaders need to predict the unintended consequences of their AI

- To mitigate risk, make ethics an inherent part of culture

This article is the fifth in a series about how business leaders can become better prepared for managing the AI disruption.

AI is great at cognitive tasks but terrible at ethical reasoning. It is so bad at ethical judgment, in fact, that questions of ethics are likely to remain one of the most challenging aspects of developing large-scale commercial applications of AI.

The ethical and moral implications of AI can impact business, society, or both at the same time. Consider the Google employees who resigned -- and the thousands who co-signed a letter to their CEO -- in protest of Pentagon-funded projects. Or the questions around legal culpability for driverless car accidents. Or the machine-learning models that amplify gender bias and racism, upping the odds of unfair hiring practices -- and future litigation.

Ethical issues like these will prompt countless judgment calls. And AI has the strange ability to make ethical questions both philosophically abstract and uncomfortably personal. For instance, AI is going to end some jobs -- whose will be the first to go? And what should be done with the people who used to do what AI now does better? What about clients who make questionable decisions with the tech they buy from you? How do we combat racism in AI? Can we treat robots humanely? Should we?

AI's sheer potential to both help and harm individuals and societies requires us to take its unintended consequences extremely seriously -- and its intended ones, too.

Organizations need to go beyond a culture of compliance and build cultures of "doing the right thing" for its own sake. And AI keeps making "the right thing" a more far-reaching, subjective and abstract concept.

Good Character Isn't Enough

A culture of compliance relies on adherence to clearly defined rules and guidelines. Often, these rules are determined by an external regulating body -- a government authority, an industry association, etc. These rules take a long time to develop and longer still to adopt.

As technology develops faster and faster, regulators will be less and less able to keep up and produce constructive ethical guidelines. There just isn't time -- tech moves too fast. For instance, in the U.S., the Genetic Information Nondiscrimination Act bars health insurers and employers from using genetic information to inform their decisions. But life insurers and long-term care insurers are not covered. By the time U.S. law has fully considered the issue, AI may well have moved so far ahead that the question is obsolete.

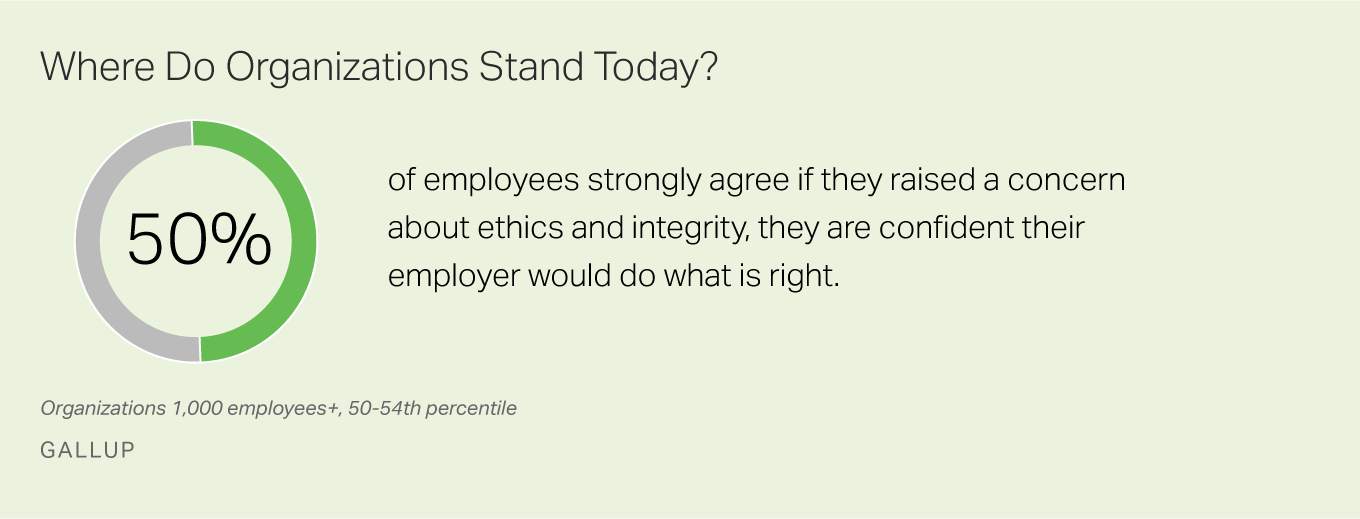

Businesses will not be able to rely on external regulations to determine what "right" looks like. Instead, they need to rely on the ethical and moral reasoning of their employees and use that resource to inform business decisions in real time.

In practice, this means leaders need to move beyond the idea that ethical business conduct is a matter of good character, or something the compliance department runs. For an AI-based business to be ethical and, thus, sustainable, everyone needs to be on the ethics team.

But are your people ready? Can they reliably perform moral reasoning in a complex environment? Do they have any incentive to? And do they recognize the stakes of the situation? Probably not -- most of us don't have our moral compasses on when we most need them.

Activate the Moral Compass

Researchers of "bounded ethicality" have produced a growing body of evidence showing that good people do bad things all the time, though not deliberately. Behavior depends less on how good a person you are and more on the situation and your level of moral awareness. But a heightened state of moral awareness is so psychologically taxing and impractical to sustain that most of us keep our moral compasses in sleep mode. This is exactly why good people are so much at risk of doing bad things.

To mitigate the risk, organizations need to create a culture that puts ethics front and center. After-the-fact controls and reviews will do less and less to contain damage, especially further down the value chain. When it comes to ethics, good will have to be done in real time as part of daily business decision-making, alongside considerations of profitability and customer and shareholder value.

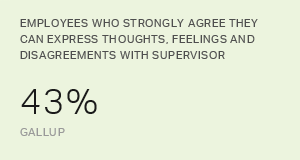

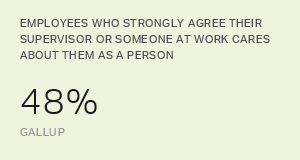

For most organizations, that requires a culture shift and a serious capability-building effort touching every single team and decision-maker -- far beyond annual compliance training and poster campaigns. Many companies are already behind: Gallup's recent survey in Europe found that fewer than 40% of employees in Germany, the UK, France and Spain strongly agree that their company "would never lie to our customers or conceal information that is relevant to them."

Navigate the Network Effect

AI-based business models are going to make this need even more acute. Decisions will arise where there isn't enough training data for AI, where the situation is serious enough to require human supervision, or where the cost of a wrong decision is too high to accept the algorithm's standard margin of error. In such cases, very fallible humans will have to step forward and make the call based on their own moral judgment.

Meanwhile, the network effect extends the reach of leaders' decisions. By offering businesses a tremendous opportunity to scale, AI is likely to accelerate the current trend of platform-based businesses monopolizing their niches -- as Google, Facebook and Amazon demonstrate. The network effect magnifies the consequences of every decision made at the core of the business, so a single decision can touch many, many customers.

For example, Facebook's policy of allowing apps to harvest certain kinds of data without users' knowledge enabled Cambridge Analytica to gain access to the data of at least 30 million users. Cambridge Analytica used that data improperly, and now regulators all over the world are investigating Facebook. Its CEO was called to testify before the U.S. Congress, the company lost $100 billion in market value, and it may be forced to accept regulations that conflict with its business model.

The business decisions we make -- the ones too important for AI to make -- will keep growing more far-reaching and more consequential. Every person involved in decision-making needs to manage their own bounded ethicality.

And as the Google example shows, ethics questions can affect every business function, including human resources. Google lost a dozen workers, presumably to its competitors -- good IT workers rarely have to look far for a job in Silicon Valley -- and thousands more Google employees are questioning the ethics and morals of their leaders. Gallup research suggests that may have a negative effect on their engagement, and with that, their productivity. Workplace problems like Google's aren't always caused by the technology itself, but they are tied to how AI is used.

Companies will need a whole new risk-evaluation lens, and everyone in the company must view their work through it. One of those risks is alienating employees and customers with the AI choices leaders make.

Trust is a substantial asset in that endeavor. Individual contributors need to trust their leaders to do the right thing, and leaders need to trust their workers. In fact, trust is so vital to the productive use of AI that it's the topic of the next article in this series.

Consider the following questions to probe your organization's readiness for the AI-powered business environment:

- To what extent do initiatives on business ethics in your organization go beyond compliance with regulations and help employees be mindful of doing the right thing in their daily work?

- Are decision-makers in your organization equipped with practical approaches for addressing the challenges of "bounded ethicality" (good people doing bad things) in their daily work?

- Does your performance management system reflect an ongoing focus on doing the right thing?

Read the first, second, third and fourth articles in this seven-part series. The next article will discuss the steps business leaders can take to create a culture of trust in the AI era.


Jennifer Robison contributed to this article.